
Applications running in EKS often require access to AWS resources, including S3 buckets, DynamoDB tables, Secrets Manager secrets, KMS keys, SQS queues, and other resources. As a security auditor, mapping EKS pods in a cluster to assigned IAM policy permissions can be challenging. In this post, we will review three different ways to audit EKS pod permissions.

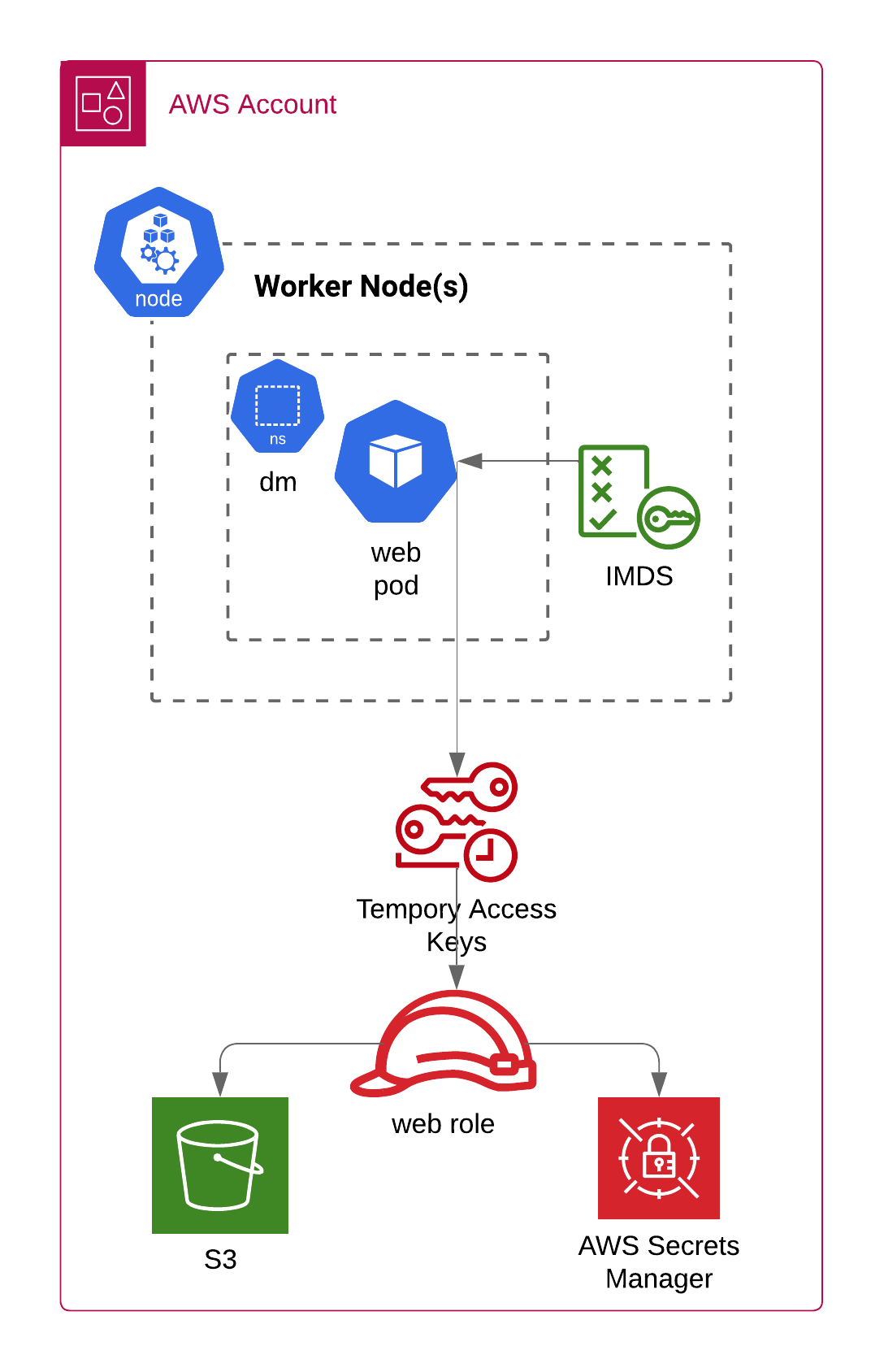

EKS avoids provisioning long-lived access keys to the cluster’s nodes by using an instance profile to pass IAM role credentials to the EC2 instance. Services running on the EKS node can request the role’s temporary access keys using the EC2 Instance Metadata Service (IMDS). By default, all pods running on the node can also communicate with the IMDS. This allows pods to request temporary access keys for the node’s IAM role and access AWS resources.
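To make that flow concrete, here is a minimal sketch of what any process able to reach the IMDS can do to pull the node role's temporary credentials. The function name and the `IMDS` variable are illustrative; the paths are the standard IMDS token and `security-credentials` endpoints.

```shell
#!/bin/bash
# Sketch only: retrieves the node role's temporary credentials from the IMDS.
IMDS="http://169.254.169.254"

imds_node_credentials() {
  local token role
  # IMDSv2: request a short-lived session token first.
  token=$(curl -s --connect-timeout 2 -X PUT "${IMDS}/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60") || return 1
  # This path returns the name of the role attached to the instance profile.
  role=$(curl -s --connect-timeout 2 -H "X-aws-ec2-metadata-token: ${token}" \
    "${IMDS}/latest/meta-data/iam/security-credentials/") || return 1
  # Appending the role name returns JSON with AccessKeyId, SecretAccessKey, and Token.
  curl -s --connect-timeout 2 -H "X-aws-ec2-metadata-token: ${token}" \
    "${IMDS}/latest/meta-data/iam/security-credentials/${role}"
}
```

Calling `imds_node_credentials` from the node, or from a pod that can still reach the IMDS, returns the same credentials document.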

Consider the EKS node in the diagram below. The node’s kubelet makes AWS API calls for the Kubernetes control plane to function, which typically requires the AmazonEKSWorkerNodePolicy, AmazonEKS_CNI_Policy, AmazonEC2ContainerRegistryReadOnly, and CloudWatchAgentServerPolicy managed policies. Knowing the web pod needs access to an S3 bucket and a Secrets Manager secret, the appropriate permissions are attached to the node’s IAM Role as well.

The web pod’s path to accessing the AWS APIs is as follows:

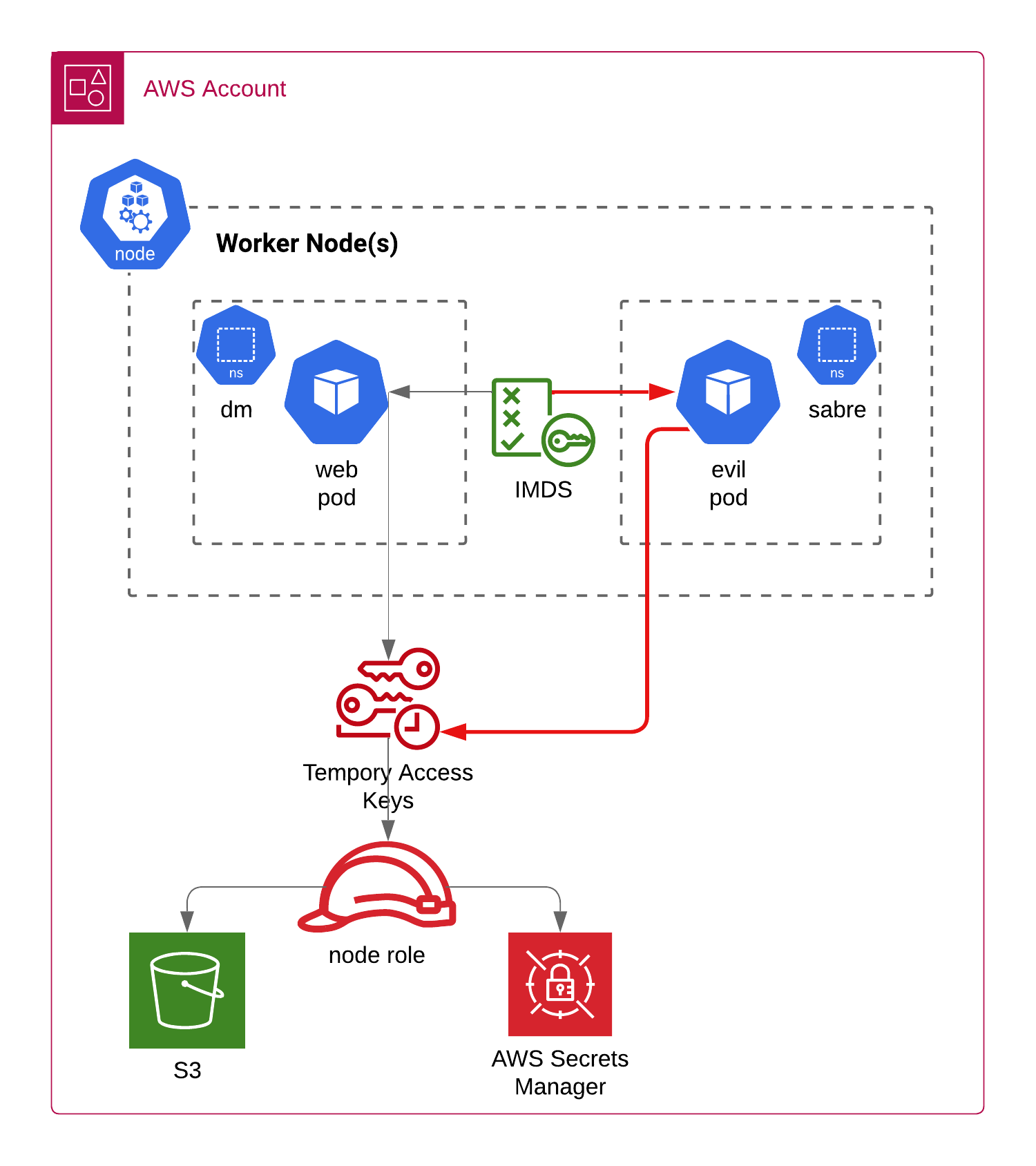

So, what’s the problem? Mixing the kubelet control plane permissions with permissions for other workloads can create privilege escalation issues. To start, the EKS node has a number of AWS managed policies, including the AmazonEKS_CNI_Policy managed policy, which could allow the web pod to create, delete, and attach network interfaces from the nodes in the cluster. These permissions are unlikely to be required for the web pod to operate.

Now, let’s consider a scenario where a new pod, evil, is deployed into a different namespace called sabre. The next diagram shows the evil pod requesting temporary credentials from the node’s instance metadata service. Those temporary credentials also carry the web pod’s permissions to the S3 bucket and the Secrets Manager secret!

Given the privilege escalation issues that come with granting pod permissions at the node level, ensure your EKS administrators disable pod access to the node’s IMDS endpoint. This avoids mixing node and pod level permissions, and makes it much easier for auditors to understand an individual pod’s permissions. This can be done using either of the following methods:

Enforce IMDS version 2 (IMDSv2) on the cluster nodes and set the response hop limit to one (1). This ensures that IMDS responses cannot be received by the pod’s network interface. The following Terraform configuration sets the appropriate IMDSv2 configuration on the node’s aws_launch_template resource.

```hcl
# IMDSv2 w/ hop limit set to 1 disables pod access to the IMDS
metadata_options {
  http_endpoint               = "enabled"
  http_tokens                 = "required"
  http_put_response_hop_limit = 1
  instance_metadata_tags      = "enabled"
}
```
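An auditor can check the same settings on running nodes. The sketch below is one way to do that: a small filter that reads instance ID, `HttpTokens`, and `HttpPutResponseHopLimit` rows and flags anything other than `required` with a hop limit of 1. The function name is illustrative, and the `eks:cluster-name` tag filter in the usage comment is an assumption that holds for managed node groups; adjust it for self-managed nodes.

```shell
#!/bin/bash
# Reads "<instance-id> <HttpTokens> <HttpPutResponseHopLimit>" rows on stdin and
# prints the instances whose IMDS may still be reachable from pods.
flag_imds_exposure() {
  local instance_id http_tokens hop_limit
  while read -r instance_id http_tokens hop_limit; do
    # Skip blank rows.
    [ -z "${instance_id}" ] && continue
    if [ "${http_tokens}" != "required" ] || [ "${hop_limit}" != "1" ]; then
      echo "REVIEW ${instance_id}: http_tokens=${http_tokens} hop_limit=${hop_limit}"
    fi
  done
}

# Usage (assumes nodes carry the eks:cluster-name tag):
#   aws ec2 describe-instances \
#     --filters "Name=tag-key,Values=eks:cluster-name" \
#     --query 'Reservations[].Instances[].[InstanceId, MetadataOptions.HttpTokens, MetadataOptions.HttpPutResponseHopLimit]' \
#     --output text | flag_imds_exposure
```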

Blocking IMDS access cluster-wide could cause problems if pods running in the cluster actually need access to the IMDS. Applying a NetworkPolicy to namespaces in the cluster that should not have access to the IMDS can provide a more flexible alternative. The following Calico NetworkPolicy allows the evil pod outbound access to networks outside the cluster, but explicitly blocks access to the IMDS endpoint:

```yaml
apiVersion: crd.projectcalico.org/v1
kind: NetworkPolicy
metadata:
  name: egress-all
  namespace: sabre
spec:
  types:
    - Egress
  egress:
    - action: Deny
      destination:
        nets:
          - "169.254.169.254/32"
    - action: Allow
      destination:
        nets:
          - "0.0.0.0/0"
```
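Whichever method is used, it is worth confirming the result from inside a pod. The following is a rough sketch, meant to be run from a shell in a pod in the sabre namespace and assuming curl is available in the container image. The function names are illustrative.

```shell
#!/bin/bash
# Returns 0 when the IMDS answers the token request, non-zero when the
# request is blocked or times out.
imds_reachable() {
  curl -s -o /dev/null --max-time 2 -X PUT \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60" \
    "http://169.254.169.254/latest/api/token"
}

report_imds_access() {
  if imds_reachable; then
    echo "IMDS reachable: node role credentials are exposed to this pod"
  else
    echo "IMDS blocked"
  fi
}
```

A pod covered by the deny rule, or running on a node with the hop limit set to 1, should report `IMDS blocked` after the two second timeout.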

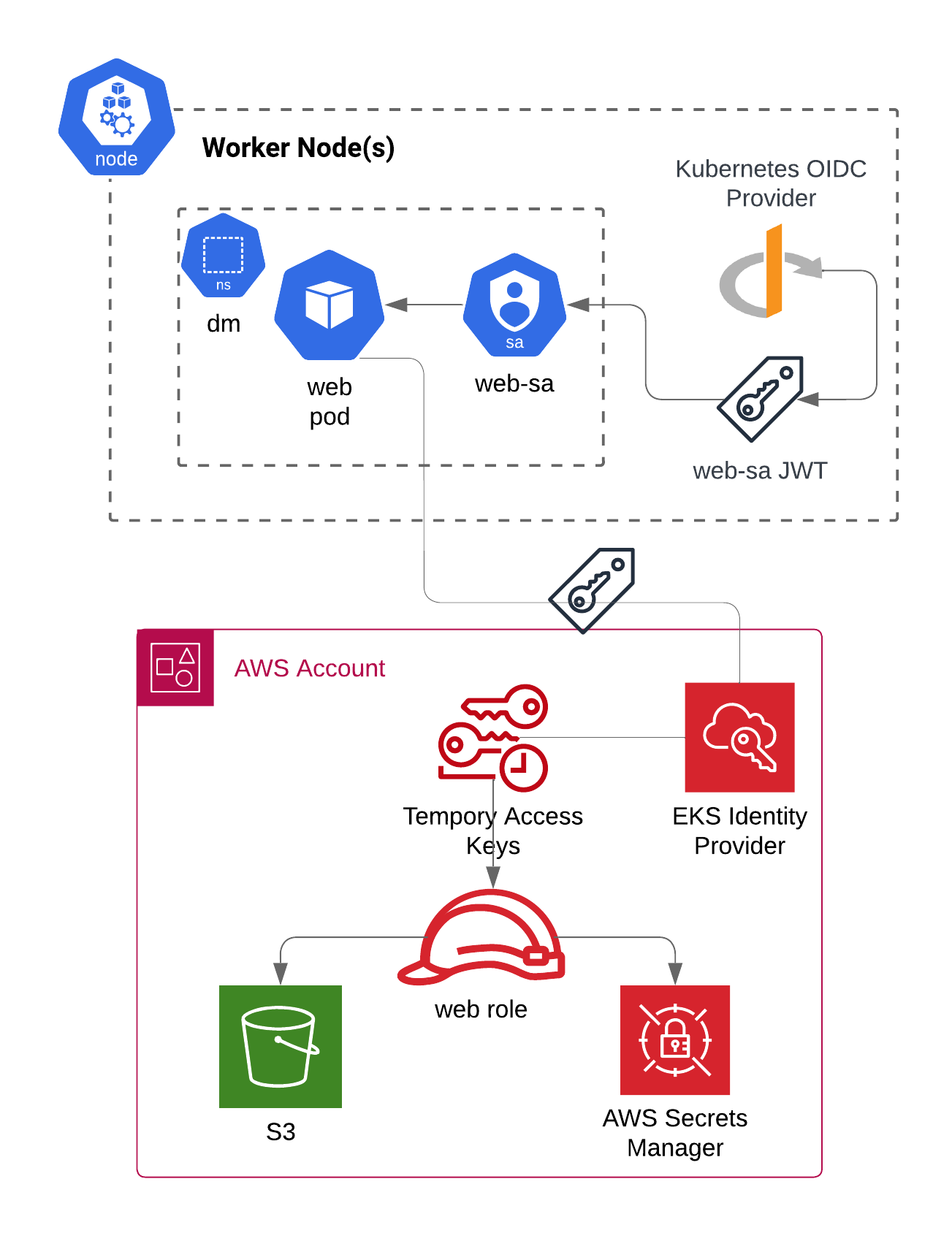

Now that the pods cannot inherit permissions from the EKS node, workloads will need an IAM Role assigned directly to the pod. In Amazon EKS, the first option for granting pod-level access to the AWS APIs is called IAM Roles for Service Accounts (IRSA). IRSA uses the EKS cluster’s OpenID Connect (OIDC) identity provider to federate into an IAM OIDC identity provider and assume a role. The updated diagram below shows the new authentication flow:

The web pod’s path to accessing the AWS APIs is now:

All EKS service accounts using IRSA will contain the eks.amazonaws.com/role-arn annotation.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: "arn:aws:iam::123456789012:role/dm-web-eks-pod-role"
  name: "web-sa"
  namespace: "dm"
```
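For pods that use an annotated service account, EKS injects the `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` environment variables, and the AWS SDKs exchange the projected token for role credentials. The sketch below performs that exchange by hand with the AWS CLI, assuming it runs inside such a pod. The function name and session name are illustrative.

```shell
#!/bin/bash
# Sketch: exchange the pod's projected service account token for IAM role
# credentials and print only the resulting assumed-role ARN.
irsa_assume_role() {
  aws sts assume-role-with-web-identity \
    --role-arn "${AWS_ROLE_ARN}" \
    --role-session-name "irsa-audit-check" \
    --web-identity-token "$(cat "${AWS_WEB_IDENTITY_TOKEN_FILE}")" \
    --query 'AssumedRoleUser.Arn' --output text
}
```

The `--query` filter keeps the temporary secret key and session token out of the terminal while still confirming which role the pod lands in.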

Auditing IAM policies assigned to pods using IRSA can be done by enumerating all of the EKS cluster’s service accounts with the eks.amazonaws.com/role-arn annotation, parsing the IAM Role ARN, and then enumerating the IAM Role’s policy attachments. The aws-eks-audit-irsa-pods script uses the kubectl and aws command line interfaces to discover IRSA pod permissions:

```shell
#!/bin/bash

# Enumerate every service account annotated with an IRSA role ARN.
while IFS= read -r sa_metadata; do
  service_account=$(jq -r .name <<<"${sa_metadata}")
  namespace=$(jq -r .namespace <<<"${sa_metadata}")
  role_arn=$(jq -r .rolearn <<<"${sa_metadata}")
  # Use the last ARN segment so roles created with a path still resolve.
  role_name=$(jq -r '.rolearn | split("/") | last' <<<"${sa_metadata}")

  echo "Service Account: system:serviceaccount:${namespace}:${service_account}"
  echo "Role ARN: ${role_arn}"
  echo "Policy Attachments:"
  aws iam list-attached-role-policies --role-name "${role_name}" | jq -r '.AttachedPolicies[].PolicyArn'
  echo ""
done < <(
  kubectl get serviceaccounts -A -o json |
    jq -c '.items[]
      | select(.metadata.annotations."eks.amazonaws.com/role-arn" != null)
      | {name: .metadata.name, namespace: .metadata.namespace, rolearn: .metadata.annotations."eks.amazonaws.com/role-arn"}'
)
```

The output shows the Kubernetes service account, IAM Role ARN, and the IAM policy attachments:

```text
Service Account: system:serviceaccount:dm:web-sa
Role ARN: arn:aws:iam::123456789012:role/dm-web-eks-pod-role
Policy Attachments:
arn:aws:iam::123456789012:policy/dm-web-eks-pod-policy

Service Account: system:serviceaccount:kube-system:aws-load-balancer-controller
Role ARN: arn:aws:iam::123456789012:role/dm-eks-alb-controller-role
Policy Attachments:
arn:aws:iam::123456789012:policy/dm-eks-alb-controller-policy

Service Account: system:serviceaccount:kube-system:ebs-csi-controller-sa
Role ARN: arn:aws:iam::123456789012:role/dm-eks-ebs-csi-role
Policy Attachments:
arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy
```
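Note that `list-attached-role-policies` only reports managed policy attachments. Inline policies embedded in the role are returned by a separate call, `list-role-policies`. A possible extension, sketched here with illustrative function names, prints both for a given role ARN and derives the role name from the last ARN segment:

```shell
#!/bin/bash
# Strip everything up to the last "/" so roles with a path still resolve.
role_name_from_arn() {
  echo "${1##*/}"
}

# Print managed policy ARNs and inline policy names for a role ARN.
list_all_role_policies() {
  local role_name
  role_name=$(role_name_from_arn "$1")
  echo "Managed policies:"
  aws iam list-attached-role-policies --role-name "${role_name}" \
    --query 'AttachedPolicies[].PolicyArn' --output text | tr '\t' '\n'
  echo "Inline policies:"
  aws iam list-role-policies --role-name "${role_name}" \
    --query 'PolicyNames[]' --output text | tr '\t' '\n'
}
```

For example, `list_all_role_policies "arn:aws:iam::123456789012:role/dm-web-eks-pod-role"` would list the role's managed attachments followed by any inline policy names.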

Launched in late 2023, EKS Pod Identity offers an additional option for assigning permissions to pods. EKS Pod Identity is specific to EKS, and does not translate into other managed Kubernetes offerings (e.g., AKS, GKE). After installing the EKS Pod Identity Agent cluster add-on, IAM Role to Kubernetes service account associations are defined on the EKS cluster instead of on the Kubernetes service account resource. For more details, see Christophe Tafani-Dereeper’s post titled Deep dive into the new Amazon EKS Pod Identity feature.

The following Terraform configuration sets an EKS pod identity association using the aws_eks_pod_identity_association resource. The resource creates an association between the sabre namespace’s web-sa service account and the dm_web_eks_pod IAM Role. As the pod is being created, the EKS Pod Identity mutating webhook will automatically inject the AWS_CONTAINER_CREDENTIALS_FULL_URI and AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE environment variables. Processes running in the container can use the AWS_CONTAINER_CREDENTIALS_FULL_URI, which will have a value similar to http://169.254.170.23/v1/credentials, to request temporary credentials for the IAM Role. Invoking the endpoint requires the Authorization header to have the identity token found in the AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE volume mount (/var/run/secrets/pods.eks.amazonaws.com/serviceaccount/eks-pod-identity-token).

```hcl
resource "aws_eks_pod_identity_association" "dm_web_pod_identity" {
  cluster_name    = aws_eks_cluster.dm.name
  namespace       = "sabre"
  service_account = "web-sa"
  role_arn        = aws_iam_role.dm_web_eks_pod.arn
}
```
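The credential request described above can be reproduced by hand from inside a pod that has an association. This is a minimal sketch, assuming curl is available in the container and both environment variables have been injected. The function name is illustrative.

```shell
#!/bin/bash
# Sketch: request temporary credentials from the EKS Pod Identity Agent,
# passing the projected identity token in the Authorization header.
pod_identity_credentials() {
  curl -s --max-time 2 \
    -H "Authorization: $(cat "${AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE}")" \
    "${AWS_CONTAINER_CREDENTIALS_FULL_URI}"
}
```

The AWS SDKs perform the same request automatically through the container credentials provider, so application code does not need to call the endpoint itself.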

Auditing IAM policies assigned using EKS Pod Identity can be done by enumerating all of the EKS cluster’s associations, finding the IAM Role, and then enumerating the IAM Role’s policy attachments. The aws-eks-audit-pod-identity-pods script uses only the aws command line interface to discover pod permissions. The kubectl command line interface is not needed because the associations live at the cluster level, not at the Kubernetes service account level.

```shell
#!/bin/bash

# Usage: aws-eks-audit-pod-identity-pods <cluster-name>
CLUSTER_NAME="$1"

# Enumerate every Pod Identity association defined on the cluster.
while IFS= read -r pod_identity_assn; do
  association_id=$(jq -r .associationId <<<"${pod_identity_assn}")
  service_account=$(jq -r .serviceAccount <<<"${pod_identity_assn}")
  namespace=$(jq -r .namespace <<<"${pod_identity_assn}")

  # The list call does not return the role ARN, so describe each association.
  association=$(aws eks describe-pod-identity-association --cluster-name "${CLUSTER_NAME}" --association-id "${association_id}")
  role_arn=$(jq -r '.association.roleArn' <<<"${association}")
  # Use the last ARN segment so roles created with a path still resolve.
  role_name=$(jq -r '.association.roleArn | split("/") | last' <<<"${association}")

  echo "Kubernetes Service Account: system:serviceaccount:${namespace}:${service_account}"
  echo "Role ARN: ${role_arn}"
  echo "Policy Attachments:"
  aws iam list-attached-role-policies --role-name "${role_name}" | jq -r '.AttachedPolicies[].PolicyArn'
  echo ""
done < <(aws eks list-pod-identity-associations --cluster-name "${CLUSTER_NAME}" | jq -c '.associations[]')
```

The output shows the Kubernetes service account, IAM Role ARN, and the IAM policy attachments:

```text
Kubernetes Service Account: system:serviceaccount:sabre:web-sa
Role ARN: arn:aws:iam::192792859965:role/dm-web-eks-pod-identity-role
Policy Attachments:
arn:aws:iam::192792859965:policy/dm-web-eks-pod-policy
```
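Both scripts report permissions per service account rather than per pod. To close that gap, one option is to list the pods that run under a given service account. The helper below is a sketch with an illustrative function name; it relies only on kubectl's `custom-columns` output and awk.

```shell
#!/bin/bash
# Print the names of the pods in a namespace that run under a service account.
pods_for_service_account() {
  local namespace="$1" service_account="$2"
  kubectl get pods -n "${namespace}" --no-headers \
    -o custom-columns='POD:.metadata.name,SA:.spec.serviceAccountName' |
    awk -v sa="${service_account}" '$2 == sa { print $1 }'
}
```

Running `pods_for_service_account sabre web-sa` would print every pod in the sabre namespace that inherits the role associated with web-sa.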

Auditing permissions assigned directly to EKS pods can be challenging and confusing with multiple options for creating associations between IAM Roles and Kubernetes service accounts. The audit scripts above are two helper scripts that live in my local bin directory for easily enumerating Kubernetes service accounts with access to the AWS APIs.

Datadog also maintains the Managed Kubernetes Auditing Toolkit (MKAT), which can be installed to perform similar permission checks.

Eric Johnson is a Co-founder and Principal Security Engineer at Puma Security and a Senior Instructor with the SANS Institute. His experience includes cloud security assessments, cloud infrastructure automation, cloud architecture, static source code analysis, web and mobile application penetration testing, secure development lifecycle consulting, and secure code review assessments. Eric is the lead author and an instructor for SEC540: Cloud Security and DevSecOps Automation and a co-author and instructor for both SEC549: Cloud Security Architecture, and SEC510: Cloud Security Controls and Mitigations.

Would you like to learn more about Cloud Identity and Workload Identity Federation? Contact us today: sales [at] pumasecurity [dot] com.